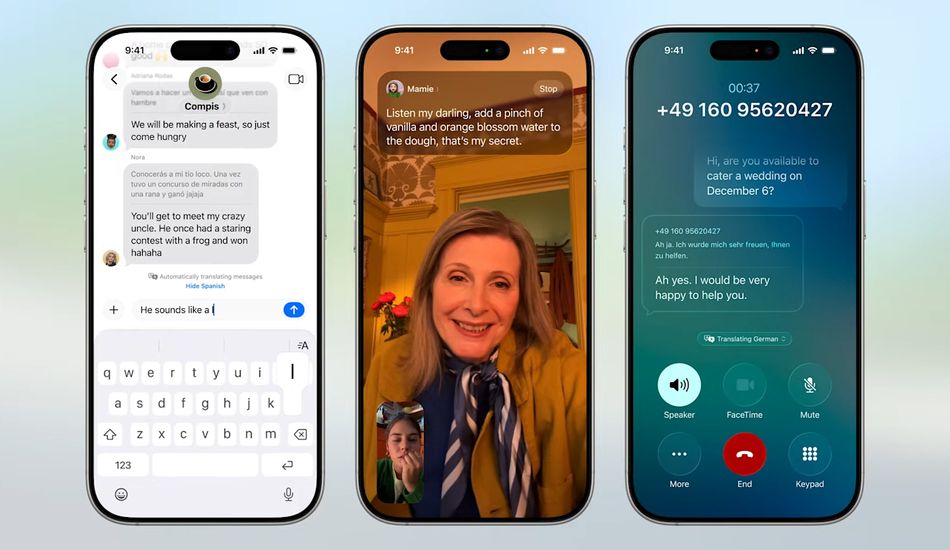

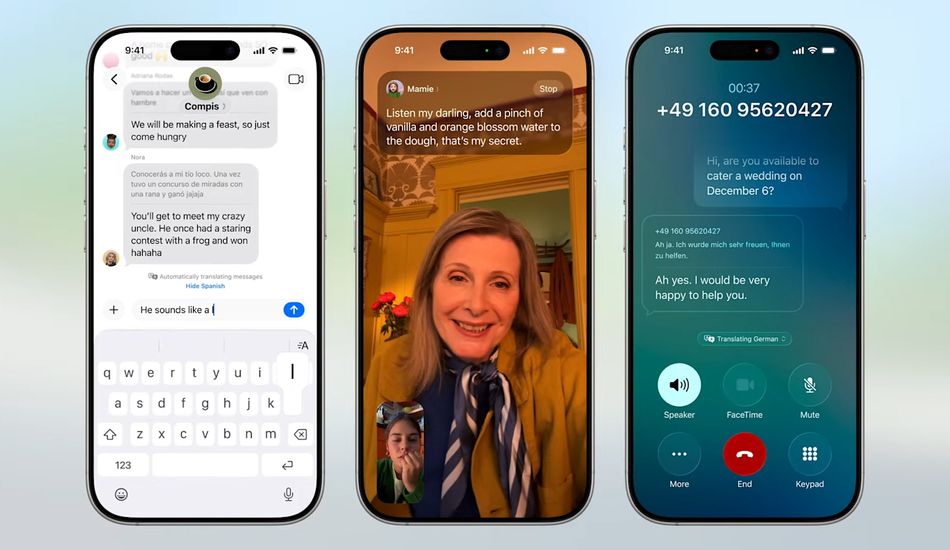

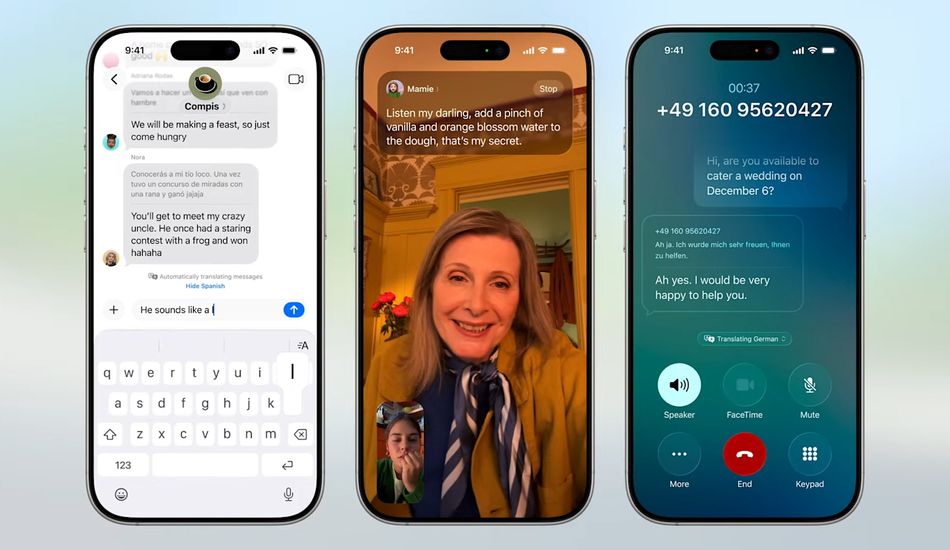

Apple Introduces AI Live Translation

Apple's Worldwide Developers Conference (WWDC) 2025 showcased the upcoming iOS 26 and iPadOS 26 updates, with a major focus on AI-powered live translation. The feature is built into core applications such as Messages, Phone, and FaceTime, offering real-time translation for calls and text messages.

During calls, translations are spoken by an AI voice and displayed as captions. Text messages are translated automatically as users type, including in conversations that extend beyond the Apple ecosystem. While the list of supported languages has not yet been specified, the capability represents a considerable step forward in communication accessibility.

Expanding Accessibility

The integration extends to iPadOS 26, mirroring the functionality on iPhones. Group chats on iPads will also benefit from automatic translation. Furthermore, the Apple Watch Series 9, Series 10, and Ultra 2 will support live translation via Messages when paired with a compatible iPhone.

This development builds upon earlier efforts such as Siri's translation features and the dedicated Translate app. iOS 26 goes further, using Apple Intelligence to build translation directly into the apps people already use to communicate rather than confining it to a separate tool.

Future Directions

While the current focus is software, speculation continues about live translation capabilities for AirPods. Apple's current offering lags behind competitors such as Google, which already provides similar features on its Pixel Buds. Details regarding this potential advancement are likely to emerge during Apple's hardware-focused keynote in the fall.


Source: Engadget