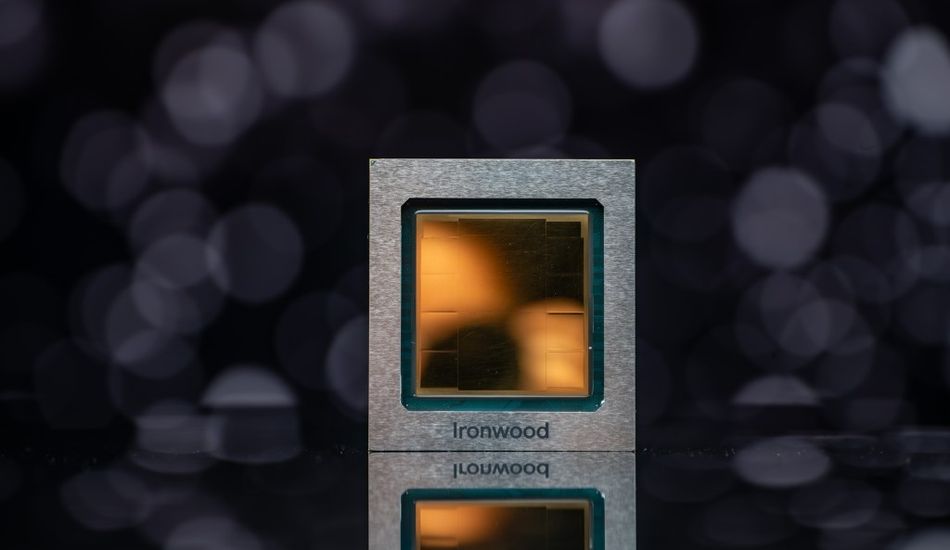

Google's Ironwood: Optimized AI Inference for Cloud Customers

Google unveiled its **seventh-generation** TPU, named Ironwood, at the Cloud Next conference. The new AI accelerator chip is designed primarily for **AI inference**, that is, running already-trained AI models efficiently. The launch places Google firmly in the increasingly competitive AI accelerator market.

Ironwood: Power and Performance

Expected to be available for Google Cloud customers later this year, Ironwood will be offered in two configurations: a **256-chip cluster** and a massive **9,216-chip cluster**. According to Google Cloud VP Amin Vahdat, Ironwood is their "most powerful, capable, and energy-efficient TPU yet," specifically engineered to power **inferential AI models** at scale.

With competition intensifying from companies like Nvidia, Amazon, and Microsoft, Google's move to improve its AI hardware is crucial. Amazon offers its Trainium, Inferentia, and Graviton processors via AWS, while Microsoft provides Azure instances powered by its Cobalt 100 chip.

Google's internal benchmarks indicate that each Ironwood chip can reach a peak of **4,614 TFLOPs** of computing power. Each chip also has **192GB of dedicated RAM** with a bandwidth of approximately 7.4 Tbps, which keeps data access fast.
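To put the two cluster sizes in perspective, the quoted figures can be multiplied out. The sketch below is a back-of-the-envelope estimate only: it assumes the 4,614 TFLOPs figure is a per-chip peak and that peak compute and memory add up linearly across chips, which ignores interconnect and utilization overhead. The function and constant names are illustrative and not part of any Google API.

```python
# Back-of-the-envelope totals for the two Ironwood cluster sizes.
# Assumption: 4,614 TFLOPs is a per-chip peak that scales linearly with chip count.

PEAK_TFLOPS_PER_CHIP = 4_614   # peak compute quoted in the article
MEMORY_GB_PER_CHIP = 192       # dedicated RAM quoted in the article
CLUSTER_SIZES = (256, 9_216)   # the two configurations on offer


def cluster_totals(num_chips):
    """Return (peak exaFLOPs, total memory in TB) for a cluster of num_chips."""
    peak_exaflops = num_chips * PEAK_TFLOPS_PER_CHIP / 1_000_000  # 1 exa = 1e6 tera
    memory_tb = num_chips * MEMORY_GB_PER_CHIP / 1_000            # decimal terabytes
    return peak_exaflops, memory_tb


for chips in CLUSTER_SIZES:
    exaflops, memory_tb = cluster_totals(chips)
    print(f"{chips:>5} chips: ~{exaflops:.2f} exaFLOPs peak, ~{memory_tb:.0f} TB memory")
```

Under these assumptions the larger configuration works out to roughly 42.5 exaFLOPs of theoretical peak compute; sustained throughput on real workloads would be lower.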

Specialized Core and Integration

Ironwood features an enhanced specialized core called **SparseCore**, designed to efficiently process data common in advanced ranking and recommendation systems. This core excels at tasks like suggesting products to users. The architecture minimizes data movement and latency, resulting in significant **power savings**, according to Google.

Google intends to integrate Ironwood into its **AI Hypercomputer**, a modular computing cluster within Google Cloud. This integration promises to further enhance the performance and scalability of AI workloads.

Vahdat concludes that Ironwood signifies a "unique breakthrough in the age of inference," offering increased computational power, memory capacity, networking advancements, and improved reliability.

Source: TechCrunch